Signals & Correctives: Megathread

This just keeps coming up in the chat, and Crispy and I did a little podcast convo about it today. Original Nerst post for the uninitiated.

A lot of threads to pull on here, I think, from Hegelian dialectic to fashion to evolutionary epistemology. Indexicality vs. generalization. The way people with roughly similar assessments can end up seeming highly polarized by "rounding up" vs. "rounding down." I remember reading a Sawyer County health center press release: they were bragging about being at COVID alert level yellow instead of orange like neighboring counties, but when I looked at the case numbers, they were within like 0.1%. Rounding problems are a big deal! You can't impose a discrete taxonomic system on a continuous clusterspace without exaggerating differences!
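A toy sketch of the rounding problem, assuming made-up alert cutoffs: the `ALERT_LEVELS` thresholds and both county rates are hypothetical, not the actual criteria any county used. Two rates a tenth of a point apart land in different categories, while two rates nearly a full point apart share one label.

```python
# Toy illustration only: the cutoffs and rates are hypothetical,
# not the real COVID alert criteria of any county.
ALERT_LEVELS = [  # (minimum percent of population sick, label), ascending
    (0.0, "green"),
    (1.0, "yellow"),
    (2.0, "orange"),
    (4.0, "red"),
]


def alert_level(percent_sick: float) -> str:
    """Return the label of the highest cutoff the rate meets or exceeds."""
    level = ALERT_LEVELS[0][1]
    for cutoff, label in ALERT_LEVELS:
        if percent_sick >= cutoff:
            level = label
    return level


# Two hypothetical counties 0.1 points apart straddle the orange cutoff...
print(alert_level(1.95), alert_level(2.05))  # yellow orange
# ...while two counties almost a full point apart share one label.
print(alert_level(1.00), alert_level(1.95))  # yellow yellow
```

The discretization both exaggerates the small gap and hides the large one, which is the "rounding up vs. rounding down" polarization in miniature.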

-

"Torque epistemology": we can contrast it with "map epistemology." Our diagnoses of the world aren't value-neutral, in-a-vacuum attempts to map a space as accurately as possible. They're attempts to "twist the stick in the mud," as Bourdieu puts it.

-

What happens when correctives (figures) lose their signal (ground)? Is this why you have to read philosophy backward?

-

Is this why "Marx was not a Marxist"? He was twisting the stick, but his rebellion became a kind of orthodoxy? And does this have something to do with the pernicious way subversive, liberatory movements inevitably turn into oppressive regimes (see Christ's Christianity)?

-

@hazard's "not all / at least one"—we all agree there are exceptions to our preferred narratives; the question is how common they are.

-

Two approaches to resolving the apparent contradictions between two high-level narratives: either (1) bite the bullet on the premise/carving/level of abstraction, draw up criteria for what counts as an example, and then randomly sample from it (super hard or impossible for most social, political, and moral stuff), or (2) get more concrete, get into the particulars, and find a difference that makes a difference.
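Once the hard part of option (1) is done, the sampling step itself is mechanical. A minimal sketch, assuming every case has already been coded against explicit criteria as "exception" or not; the population, the 30% exception rate, and the sample size of 200 are all invented, and the Wilson score interval is just one standard choice of confidence interval for a proportion.

```python
import math
import random


def wilson_interval(successes: int, n: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a binomial proportion (about 95% for z=1.96)."""
    if n == 0:
        raise ValueError("need at least one sample")
    p_hat = successes / n
    denom = 1 + z**2 / n
    center = (p_hat + z**2 / (2 * n)) / denom
    half = z * math.sqrt(p_hat * (1 - p_hat) / n + z**2 / (4 * n**2)) / denom
    return center - half, center + half


# Hypothetical population: 10,000 cases already coded as fitting the
# narrative (False) or being an exception (True); 30% exceptions assumed.
rng = random.Random(0)
population = [rng.random() < 0.3 for _ in range(10_000)]

# Simple random sample without replacement, then estimate the exception rate.
sample = rng.sample(population, 200)
exceptions = sum(sample)
low, high = wilson_interval(exceptions, len(sample))
print(f"{exceptions}/200 exceptions; 95% CI {low:.2f} to {high:.2f}")
```

Note that the code only covers the easy step: all the difficulty the paragraph points to lives in producing `population`, i.e. agreeing on what counts as an instance in the first place.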


hazard:

Recording some distinctions you made earlier:

We've been using Signal/Corrective differently from Nerst. We've been using it to express one's motivation for making a claim, whereas he uses it to express the semantic content of a claim. We've been using it as "the reason I'm saying 'not X' is that I feel I'm surrounded by this dominant ideology that says 'X!' (that's the signal), and I need to correct it." Nerst uses it like "We could both say the sentence 'yes X,' but it can be the case that I see 'yes X' as the main, substantive, important thing (the signal) and you see it as a less important hedge/clarification (the corrective)."

Seems reasonable to leave S/C to Nerst. I just spent a bit too long brainstorming a new handle:

- What's the thing?
  - Conceptual
  - Memetic
  - Ideological
  - Narrative
- Getting at the "that's relevant to you and that manifests in your life and experience"
  - Filter Bubble
  - Backyard
  - Neighborhood
  - House
  - Collision Domain
- What are you doing to it?
  - Purification
  - Bath water drain
  - Baby Catch
  - Rebuttal
  - Adjustment
  - Counter
  - Correction
  - Path
  - Response
  - Critique
  - Normalization

Of all of those, I'm kinda in love with memetic collision domain correction.

I like collision domain over filter bubble because filter bubble makes me focus more on the possibility that there's a lack of conceptual diversity, while collision domain captures "the shit that bumps into you and you into it" and still allows that people can have wildly disjoint collision domains. Memetic and correction also feel like natural choices.

The downside is that the "dominant ideology" part sorta gets implicitly folded into "correction" (I'm making this correction because I see it getting ignored in my collision domain). Though I'm okay with that, because it never feels right for me to say "dominant ideology" without saying "in this filter bubble, social circle, collision domain".

hazard, in reply to suspendedreason:

The more I ponder this, the less clear it is to me that I have ever cared about an actual fraction when I have made a quantifier/generalizing statement. Well, at least in the context of things that end up as arguments with people. Like, take "more often than not", and a sentence like "more often than not, a disagreement is rooted in miscommunication". The most straightforward denotational meaning I can draw from that is "in over 50% of arguments, the root cause is miscommunication", and the only way I can think of rn to turn that into something else meaningful is "if I know nothing about an argument, begin approaching it as if it was caused by a miscommunication", and then I notice, wait a god damn fuck... "if I know nothing about an argument"? If I'm in an argument, I know something about it! I know a lot about it! Why would I care about a zero-info probabilistic guess?

Okay, sometimes I'm talking with someone, and they tell me something like "I had an argument with my partner." K, that's an argument I don't know anything about. Actually, not true: I know this person and have prior info. But even if I didn't, "act as if it was caused by miscommunication" seems strictly less useful than "ask for more details about what happened, and suggest the possibility of miscommunication if it seems relevant."

Like, if I'm trying really hard I can construct a scenario: I do troubleshooting over the phone. I talk to hundreds of people about their tech problems. I record shit tons of symptom-solution pairs and use them to make a 20-questions decision tree that's information-theoretically optimal. I crank out more successful calls per week and get paid more. But now it's interesting, because it's this whole decision tree that I've made, and not just a single "more often than not X." The decision tree presupposes rapidly trying and testing different things. It also presupposes shit tons of knowledge on my end to actually take care of the details; the decision tree just helps me optimize high-level flows through the problem space.

Nothing in my life jumps out as being like that.
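The phone-troubleshooting scenario can be made concrete. A minimal sketch, assuming a made-up call log of (symptoms, fix) pairs: at each step, ask about the symptom whose yes/no answer most reduces the entropy of the possible fixes. This is the greedy information-gain heuristic (as in ID3), swapped in for illustration; it builds a good tree one question at a time but is not guaranteed to produce the globally optimal tree. All symptom and fix names are hypothetical.

```python
import math
from collections import Counter


def entropy(labels: list[str]) -> float:
    """Shannon entropy, in bits, of a list of fix labels."""
    counts = Counter(labels)
    total = len(labels)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def best_question(records: list[tuple[frozenset[str], str]], questions: list[str]) -> str:
    """Greedy (ID3-style) choice: the symptom whose yes/no answer
    most reduces uncertainty about which fix will work."""
    base = entropy([fix for _, fix in records])

    def gain(q: str) -> float:
        yes = [fix for syms, fix in records if q in syms]
        no = [fix for syms, fix in records if q not in syms]
        # Weighted entropy left after hearing the answer; skip empty branches.
        remainder = sum(len(part) / len(records) * entropy(part) for part in (yes, no) if part)
        return base - remainder

    return max(questions, key=gain)


# Hypothetical call log: (symptoms the caller reported, fix that worked).
records = [
    (frozenset({"no_power"}), "check_outlet"),
    (frozenset({"no_power", "beeping"}), "replace_psu"),
    (frozenset({"slow", "popups"}), "malware_scan"),
    (frozenset({"slow"}), "free_disk_space"),
    (frozenset({"no_wifi"}), "restart_router"),
    (frozenset({"no_wifi", "slow"}), "restart_router"),
    (frozenset({"no_wifi"}), "restart_router"),
]
questions = ["no_power", "beeping", "slow", "popups", "no_wifi"]
print(best_question(records, questions))  # no_wifi
```

Here "no_wifi" wins because every call that reports it shares one fix, so the answer splits the log most cleanly. Building the full tree means repeating this choice on each branch, which is why the useful artifact is the whole tree rather than any single question.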

Sometime soon I'm going to go back through a lot of my past writing, find all my generalizations, and see whether, with more effort, I can find the concrete specifics I actually wanted to communicate.

suspendedreason, in reply to suspendedreason:

Conversation @crispy and I did about S&Cs and "twisting the stick": https://pfeilstorch.substack.com/p/torque-epistemology

The uses were still pretty blended in my mind as of the conversation; I'm still working to untangle things.

suspendedreason, in reply to suspendedreason:

First question: what are some ways people have gotten use out of this term?

is Arendt trying to provide a charitable reclamation of an outmoded frame, signal-corrective style?

discovering a process is way harder than discovering a singular effect, and that involves a lot of signal corrective as to what node in the process you're looking at

When the data set under discussion is itself vague, there's no way around all participants being hazy on where exactly, on the spectrum between NA and ALO, the judgment falls, and people end up framing it like overrated/underrated signal-corrective style, but they end up talking past each other because they have different understandings of the signal

but i feel like you're doing a signal corrective here and im not

my only fear is throwing the baby out with the bathwater

Snav has talked about the inverse pipeline, which might be closer to your experience: hanging a lot in STEM programs with naive positivists/rationalists too quick to discount continental traditions

And it's telling that he's ended up in the comment sections of SSC posts pilling rationalists on Freud or Lyotard, whereas I wrote a book trying to insert blogosphere thought into the NYC arts & culture scene

e.g. the formulation of a bravery debate, which I think John Nerst basically picks up on and turns into "Signals and Correctives"

So, in order:

- Charitable reclamation of something out-of-fashion/underpriced ("buying low"?)

- There's a tug-of-war of attention/causal attribution to one or another side of things—things get oversaturated and then people argue for the "slept-on" position

- A lot of arguments of the form "X is bad" or "X is good" are actually arguments that "X is overrated" or "X is underrated": in the full set of X, instances will be a mix of good and bad, and people will have certain high-level preferences for or against X-style solutions.

- There's a strong version and a weak version of a position, and when we support X we frequently support the strong version against individuals criticizing the weak version. This means people can more or less agree on X but take different sides and talk past each other. People choose to support or criticize X depending on whether they think X is under- or overrated by public opinion.

- People end up responding to the bad arguments in their current or formative milieus. When two different milieus have the same kind of biases but in different directions, individuals in those milieus who hold "the same" assessments can end up taking very different stances on the same issue. Because the weak version proliferates, it is more commonly encountered and argued against, but this can lead to people ignoring the merits of the strong version.

- Everyone thinks their view on a hot issue is persecuted/precarious, and overstates the strength of their opposition. This leads to them holding themselves to lower standards or even "playing dirty."

From "Against Bravery Debates":

Then we get into more subtle forms of selection bias. Looking at the articles above, I am totally willing to believe newspapers are more likely to blaspheme Jesus than Mohammed, and also that newspapers are more likely to call a Muslim criminal a “terrorist” than they would a Christian criminal. Depending on your side, you can focus on one or the other of those statements and use it to prove the broader statement that “the media is biased against Christians/Muslims in favor of Muslims/Christians”. Or you can focus on one part of society in particular being against you – for leftists, the corporations; for rightists, the universities – and if you exaggerate their power and use them as a proxy for society then you can say society is against you. Or as a last resort you can focus on only one side of the divide between social and structural power.

Similarity one: people not being totally honest, ranging from unconscious rhetorical overstatement all the way to justifying playing dirty because you're punching up. Similarity two: the idea of something being overrated or overpriced. When people are perceived to believe, or be uncritical toward, the weaker and more tenuous claims an ideology makes, this leads others to buy the "underpriced" option. Similarity three: stances are situated.

suspendedreason, in reply to suspendedreason:

I think I might want to adapt Latour's discourse-as-battlefield metaphor from "Why Has Critique Run out of Steam?" When a given side claims too much territory and power for itself, people start turning on it, taking it down a notch in intellectual geopolitics. Critique is a kind of assault.

Would it not be rather terrible if we were still training young kids—yes, young recruits, young cadets—for wars that are no longer possible, fighting enemies long gone, conquering territories that no longer exist, leaving them ill-equipped in the face of threats we had not anticipated, for which we are so thoroughly unprepared? Generals have always been accused of being on the ready one war late— especially French generals, especially these days. Would it be so surprising, after all, if intellectuals were also one war late, one critique late—especially French intellectuals, especially now?

Threats might have changed so much that we might still be directing all our arsenal east or west while the enemy has now moved to a very different place.

Most people think they are "speaking truth to power"—theirs is the righteous side, and the opponent vastly outguns them.

fr. an old message:

The actual epistemic territory occupied by a discipline is always highly vague, not just because the field is decentralized and populated with diverse views, but because the exact status of even each individual's views is inherently and meaningfully vague. Let's take a case like behavioral economics and JDM, which attempted to rebut the "rational man" theory. What even was the rational man theory? How stringent was it, and in what cases and in what way would it actually predict behavior? The issue of qualitative scale (how "biased" versus how "rational" human beings are, both contested traits in their own right) means the Kahneman/Tversky papers are up against a shadow, and are themselves a shadow in the theory of human behavior they portray. Both sides are sorta right, but people end up occupying sides depending on their departments, colleagues, and work; they have a "stake" in a position and natural incentives to help that position claim more ground (attention, influence) in the field.

suspendedreason, in reply to suspendedreason:

@hazard I like "bathwater drain" and "baby catch." They definitely reflect the strong vs. weak dynamic, where you might admit that many people take X too far but think the core of X is important and undervalued, so you support X. And the other guy goes, "Well, the reason I'm pushing against X is because people take X too far!" (The first is a baby catcher, the second a bathwater drainer, and their goals/"actual positions" are very similar even as they end up on opposite teams.) Speaking of which, I wonder if this is how it happened in the Civil War...

I'm not sure how to think of rebuttal/counter/adjustment/correction/response yet. It could be all one kind of thing or two kinds of things or maybe it's more complicated than that for the question at hand. (Which uh is what exactly...? I'm forgetting...)

What's "Path"?

"Normalization" and "making space for" are definitely in this general ballpark of "transformative beliefs/utterances" (vs one-to-one relationship between core belief and expression)

suspendedreason, in reply to suspendedreason:

I think part of what I want out of the S/C or torque-epistemology frame is a better account of the cycle of mutual exaggeration, suspicion, and increasingly dichotomous thinking than what "The Toxoplasma of Rage" presents. "Extremism gets clicks" is the main gist I recall from that piece, and I don't think it's actually that big a part of the picture.

Examples are the lifeblood of the inexact sciences, so let's get into examples.

Today I had an interaction with a guy from Houston: "I'm from Texas, so you can guess how I feel about the whole pandemic business. Got COVID, got vaccinated, both were a lotta fun." I was struck by how this blasé attitude is "subsidized" by liberal over-concern/paranoia (masking young kids, overestimating hospitalization by a factor of 10, etc.). One side gets to go, "No one is taking this seriously! It's very serious, guys!" and the other side gets to go, "Pfffft, it's nothing, NBD." They're in a symbiotic relationship, right? If it weren't overplayed, there'd be no points to score from being so carefree about it all. (I remember how many points I could score by being carefree about finals week at Columbia, when everyone else was having a panic attack.)

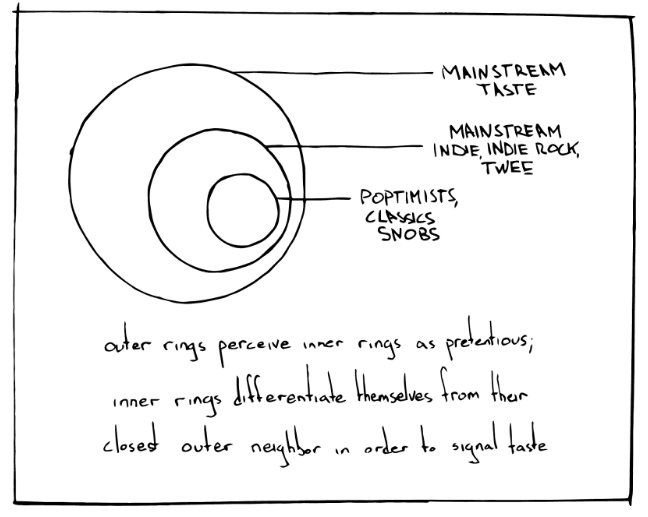

Another example: Top 40-style pop music was seen as garbage, not worth engaging with, by music critics for the second half of the 20th century. Was this position, and the signaling distinction, made possible by the dominance of pop? (An "overrated" ruling masquerading as an in-a-vacuum "worthless" ruling.) And then, once this critical consensus had been around long enough, and the critics hung out together and were all saying the same thing, some of them started arguing that Pop Was Good, Actually.

Related images:

This is why I wanna nail the interplay between Bourdieusian distinction (make yourself different to capture symbolic capital) and Girardian mimesis (you pick up desires from your social embedding).

suspendedreason, in reply to suspendedreason:

@hazard wrote:

The more I ponder this, the less clear it is to me that I have ever cared about an actual fraction when I have made a quantifier/generalizing statement. Well, at least in the context of things that end up as arguments with people. Like, take "more often than not", and a sentence like "more often than not, a disagreement is rooted in miscommunication". The most straightforward denotational meaning I can draw from that is "in over 50% of arguments, the root cause is miscommunication", and the only way I can think of rn to turn that into something else meaningful is "if I know nothing about an argument, begin approaching it as if it was caused by a miscommunication", and then I notice, wait a god damn fuck... "if I know nothing about an argument"? If I'm in an argument, I know something about it! I know a lot about it! Why would I care about a zero-info probabilistic guess?

I wanna push back on this, because I think a lot of arguments are over generalizations like this, and they do seem to matter. Let's take the metis vs. episteme example: rationalists like Scott are trying hard to figure out how often top-down intervention-from-afar backfires, and how much respect should be accorded to local wisdom. I think that high-level orientation matters, or seems to, even if in a given situation you have considerable information about the specific problem/solution at hand in a potential intervention. If you don't think this kind of high-level generalization really matters, that would be interesting but radical: it implies that the entire metis/episteme discourse is unproductive, right? And even if you increased the fidelity ("In a situation where the top-down intervening party satisfies X criteria, and the local knowledge satisfies Y criteria, intervention is usually good"), these generalizations would still be unproductive in your frame. And since generalization/indexicality is a spectrum... well, where does it end? At what point do generalizations become important?