Alex Boland: Narrative Engineering

Discussion post of Alex Boland’s “Narrative Engineering”. A lot of provocative stuff in this essay for us. Markets as anti-inductive. Godel and completeness. The role of expert intuition. The validity of models.

Complexity vs engineering

He starts out with the Three Body Problem (skip if you’re already familiar, otherwise it’ll set the scene):

Imagine a planet orbiting a star, nothing else in the picture, at all. This is the whole universe. Only having to take these two things into account, it’s possible to write up a mathematical equation that reliably describes their trajectory; in time, as they pull on each other, they will eventually settle on a predictable stable pattern. Now, let’s say that planet has a moon. Now everything goes to hell: this doesn’t just make the problem harder, it makes it literally impossible. Poincare proved that there exists no equation to describe this situation, not least because there is no ultimately stable long-run pattern. Eventually, even the tiniest difference in the initial positions, speeds, and masses of these celestial bodies will dramatically change the outcome, which means that even if you make a computer simulation of this, you can’t ever fully predict it because your inputs will have a finite number of digits and will have to be ever so slightly different than the actual initial conditions.
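For anyone who wants to see that sensitivity rather than take it on faith, here is a minimal sketch. It does not simulate three bodies. It swaps in the logistic map, a much simpler textbook chaotic system, and the function names and starting values are my own illustrative choices, not anything from Boland's essay. Two runs start a ten-billionth apart, and the gap between them is tracked step by step.

```python
def logistic_orbit(x0, steps, r=4.0):
    """Iterate x -> r * x * (1 - x), returning the whole trajectory."""
    xs = [x0]
    for _ in range(steps):
        xs.append(r * xs[-1] * (1.0 - xs[-1]))
    return xs


def divergence(x0, eps, steps):
    """Absolute gap, step by step, between orbits started at x0 and x0 + eps."""
    a = logistic_orbit(x0, steps)
    b = logistic_orbit(x0 + eps, steps)
    return [abs(p - q) for p, q in zip(a, b)]


# Hypothetical inputs: any start in (0, 1) and any tiny eps behave similarly.
gaps = divergence(0.2, 1e-10, 100)
print("initial gap:", gaps[0])
print("gap after 10 steps:", gaps[10])
print("largest gap within 100 steps:", max(gaps))
```

The gap stays tiny for the first handful of steps and then grows until the two runs have nothing to do with each other, which is the finite-digits problem the quote describes: no measurement of the starting state is ever precise enough for the long run.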

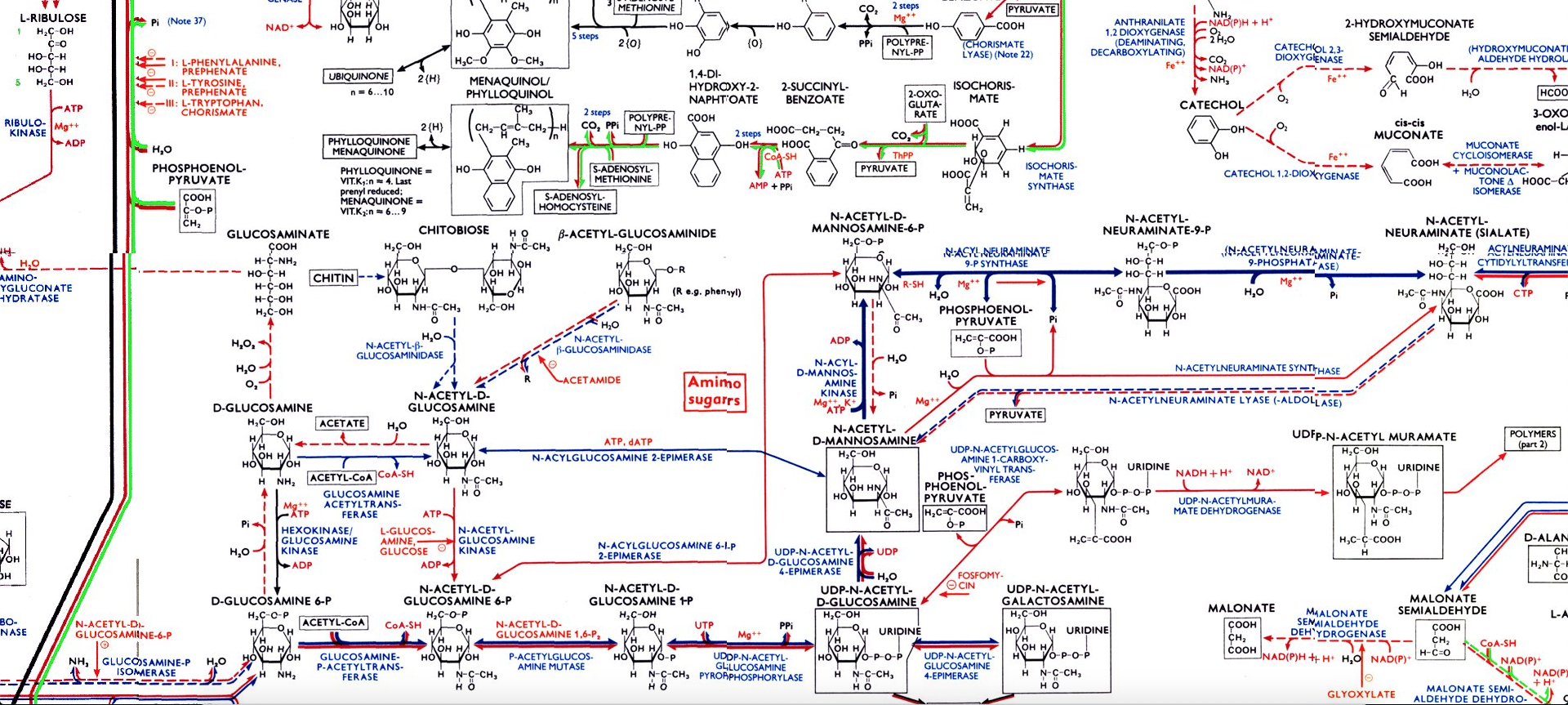

Then he shows us a massive diagram on known metabolic pathways in the body. The full image is so large it won't render on our forum, so I've snapshotted a very small subsection for flavor:

Boland casts this as a parable for complexity at large, cf. all the chaos theory stuff about butterflies: minute differences in starting conditions radically alter the end state. And yet, despite all this complexity, engineering more or less works:

in a given year there are tens of millions of flights but only around a dozen crash, diseases get cured, vast amounts of goods are reliably transported across the world at ever increasing volumes. None of this was ultimately accomplished IKEA-style from textbook theories; it was the result of relentless tinkering and trial and error, the work of engineering. Engineering is messy, it has no explicit rules and its approximations are sloppy to degrees that make theorists spit out their coffee.

The view of engineering he espouses feels aligned with the pragmatic prejudices of this board. His line that “adding negative numbers, imaginary numbers, and so forth allows the mathematician to do more,” separate from ontological questions about whether imaginary numbers “exist,” could be straight out of Crispy’s Concepts are Tools, not Artifacts frame. But I’m surprised by his splitting off of engineering from prediction:

engineering, isn’t about getting predictions right or wrong, and it’s most definitely not about establishing a scientific claim as unequivocally True or False, it’s about doing what works, of which models, predictions, experiments, and falsifications constitute an invaluable part but are not the essence of it.

We’ve talked before in the server about high-level abstractions as angels and demons on our shoulder: how opposing ideological stances can both seem reasonable in the abstract, e.g. Chesterton’s fence vs. vestigial features, or the benefits of external oversight vs. the legibilizing costs. Neither conservatism nor liberalism is the “correct” orientation globally; rather, in some cases, one bias proves more effective as a guiding star than its opposite would have. The only way I know that progress can be made on these issues is by drilling into the relevant differences: which cases are more amenable to one approach over the other, and what the relevant distinctions and categorizations are. I think Boland would agree:

At this point it would be fair to suggest I’m playing word games, so let me put it another way: there is a difference between a model seeming like it works but then turning out to be bunk, and a model actually working but just not everywhere.

Newtonian mechanics weren’t “wrong” in some binary so much as they were incomplete; they correctly mapped most cases humans cared about, and worked just fine for architecture or artillery fire. To my mind, a “model” (which predicts) and an engineered solution (which “works”) are the same in this regard. Given a specific subset of the overall space of possibles (those state conditions which are most likely or common in a domain, what we might call “the normal state of affairs”), the general relationship between input and output, intervention and effect, holds. Of course, as human activity drifts and the environment changes, this state of affairs, and the demands we put on our tools, change in turn.

Boland states as much: “there’s no such thing as a universally valid model,” and “new models exist because of the need to fill in the holes left by previous models.” Still, for all his distinctions between engineering and models, and given his understanding of conflict as a driver of continuing adaptation, I don’t see why the same can’t be said about engineering. After all,

Just like science utilizes models that work well enough, people, and for that matter organisms in general, manage to do things that simply work well enough for their purposes.

Perhaps the distinction I can best make sense of is that models are “a middleman, not the engine [of knowledge production], and for that matter not the end goal either. The end goal always has been and always will be efficacy.”

(Boland uses a term I like a lot here, tractable habit, to describe efficacious behaviors that stick around because they’re efficacious.)

I’m intrigued by his discussion of homomorphism and congruence, concepts I wasn’t familiar with before. There is some overlap with alignment problems (AI Alignment Forum folk would call the strategic appearance of congruence “deceptive alignment”):

A model that is congruent with its subject is one that’s capable of anticipating its behavior. The problem is that a model can appear to be congruent until it doesn’t.

Lessons for the Inexact Sciences

I think Boland would be a valuable guy to have around the board, if he’d be willing. There’s a lot he clearly understands about science, inference, and language which we have been stumbling toward on our own. The essay contains some gems about the pipeline from inexact to exact sciences, as well as the construction of concepts.

From the essay:

Fields like physics might have precise measurements, well-known natural laws described by powerful models, strict codes of protocol; but these a priori rules didn’t just descend from the heavens, we were only able to get there by improvisationally seeking out pockets of order within the chaos and gradually refining these practices into increasingly universal structures.

Rigor follows from the maturity of a practice, when ideas and conjectures finally converge on some pocket of stability.

And on language problems (related to a recent Amirism post):

there’s no bucket holding all the models that you can just stick your hand in, you have to construct a model, which means picking relevant attributes. Of course, attributes themselves are not things that can merely be picked out of some bucket, they are defined relative to other concepts, which themselves are defined relative to other concepts, and so forth. The positivists claimed that all this eventually settled on an absolute ground of “sense data,” from which everything else could be built up from self-evident foundations, but this too is completely smashed to bits by Godel’s proof that you can never have enough axioms to say anything.

Pfeilstorch and extraordinary science

When he gets to Thomas Kuhn, I think he really starts to define what’s exciting about this moment in time, intellectually, where old regimes are proving inefficacious, and old paradigms are unable to make progress:

As long as [the dominant] paradigm continues to produce promising results, it is in a phase called normal science in which scientists contribute incrementally by essentially solving puzzles inscribed in stable rules that continue to work. There eventually comes a time, however, where the paradigm starts failing to produce any new results, either failing to predict what it ought to be predicting or simply finding no new applications or even relevant questions.

Such sluggishness inevitably hurtles into crisis as the paradigm grinds to a halt and the people and institutions involved enter a phase known as extraordinary science: a process of Bricolage in which disparate concepts from all kinds of unconventional places are connected in novel ways to create the foundations of a new paradigm.

What is most worrying to Boland is when there is a narrative monopoly or hegemony, in contrast to a “rich ecology of narratives.” For one, this means an impoverishment of materials when the time for bricolage comes. For another, this means that said monopolies end up extracting rent, as in the case of successive generations of academics enforcing their own paradigms on their graduate students in order to bolster their own intellectual legacy:

If a paradigm fails to serve any purpose beyond socially or financially validating its constituents, the only way it could be surviving is through rent-seeking.

...monopolies on social capital… cause the overall narratives themselves to be disproportionately owned by the few and subject to rent-seeking, with certain narrow institutional paths being the only way offered to the inquisitive for finding any kind of narrative in which to play a part.

Probably his biggest challenges to our project are (1) his criticism of theory and (2) his argument that “further progress [cannot] get made by mechanically extrapolating from existing formalisms.” I also felt, after reading, that for all our lip service to functional pragmatism, or subsuming taxonomy to telos, we haven’t articulated clear engineering goals for all this knowledge we’re consuming and producing.

I’ll wrap up with a bit about tinkering and abduction, which feels aligned with some of @crispy’s thinking on inference and pfeilstorchs. Boland goes on, in the second half of the essay, to discuss narrative open-endedness, play, and the generation of possibilities—but I’m still trying to grapple with what arguments he’s advancing, and how the parts cohere.

Nor does further progress get made by mechanically extrapolating from existing formalisms: science is inextricably enfleshed in tinkering. However many abstractions there are, scientists still have to do the experiments by interacting with the material: they operate instruments, pass down and receive folk wisdom, have conversations, and most illustrative of all, they understand where corners can (and must) be cut; which is why Popper was right to understand that an errant observation here or there can’t be considered an inherently damning thing. All this is why despite all the torrential downpours of data that amount to nothing, a revolutionary theory often comes with just a little bit of data. More simply isn’t better, data without abduction is as inert as a hammer without a hand.

suspendedreason

Relevant: a recent thread by @the_aiju on Twitter

the biggest mistake that follows from a reductionist worldview is MASSIVELY underestimating just how complex everything is

engineering is actually prior to science, not the other way around! — forcing the world to a lower complexity using engineering is the first step to scientific discovery

astronomy is a notable exception to this — the heavens are surprisingly low complexity from our point of view! — and unsurprisingly the earliest science to fully develop

In reply to suspendedreason: hazard

Newtonian mechanics weren’t “wrong” in some binary so much as they were incomplete; they correctly mapped most cases humans cared about, and worked just fine for architecture or artillery fire.

On this point, I remember being really wowed when I first heard about parameterized post-Newtonian formalisms. I don't have the physics or math chops to fully get it, but my understanding is that there's a way to express space-time equations such that Newtonian theories are just Einsteinian theories, but missing a few terms. In other words, Newtonian gravity is literally an approximation of Einsteinian gravity.

It's just that in both of their original forms, they looked so different that you couldn't see a clear connection between them. It's funny, a paradigm shift (Einstein) needed to happen to move past Newton at all, even though the two theories aren't inherently different. Oh, or maybe a better description (my lack of understanding is shining through) is that space-time is a way more on-point paradigm than gravity was, so you needed the paradigm shift, and then eventually people were able to understand the old formulations in terms of the new paradigm.
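One concrete way to see the "missing a few terms" idea, offered as a sketch and not as the parameterized post-Newtonian formalism itself: take a small test mass m orbiting a non-rotating mass M with angular momentum L (symbols chosen here for illustration). The standard effective potential for its radial motion in general relativity is the Newtonian one plus a single extra term:

```latex
V_{\text{eff}}(r)
  = \underbrace{-\frac{G M m}{r} + \frac{L^{2}}{2 m r^{2}}}_{\text{Newtonian}}
  \;-\; \underbrace{\frac{G M L^{2}}{c^{2} m r^{3}}}_{\text{relativistic correction}}
```

Letting c go to infinity, or looking far enough from the mass that the 1/r^3 term is negligible, drops the last term and leaves exactly the Newtonian potential. That extra term is what produces effects like the precession of Mercury's orbit.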

In reply to suspendedreason: hazard

At some point I wanna do a big ol post on the expansion of the concept of "numbers" over history. It's really cool, and I think it sheds a lot of light on the interplay of aesthetics, experimentation, and rigor.